From Automation to Autonomy

The enterprise is at a critical inflection point. The era of simple generative AI experiments (chatbots, summarization tools) is giving way to a far more ambitious goal: deploying autonomous AI agents that can reason, plan, and execute entire business processes.

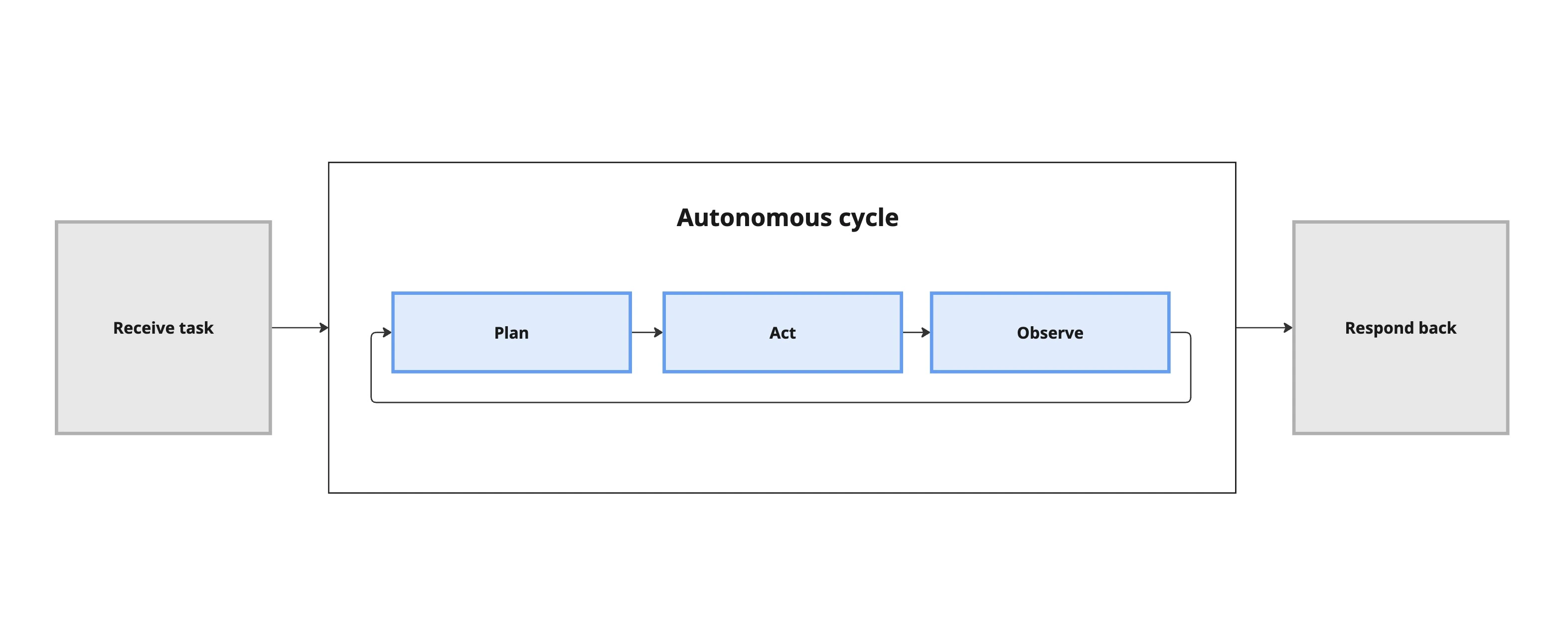

This is a fundamental shift in capability. Traditional automation, like Robotic Process Automation (RPA), follows a rigid script. It's brittle. An AI agent, in contrast, operates within a dynamic loop: it plans a course of action, acts on that plan using available tools, observes the outcome, and course-corrects as needed. This is the difference between a player piano and a jazz improviser.
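To make the plan, act, observe, course-correct loop concrete, here is a minimal sketch. Everything in it is hypothetical: `run_agent`, the two lookup tools, and `scripted_planner` are illustrative names, and the hand-written planner stands in for the LLM that would normally choose the next step.

```python
from typing import Callable, Dict, List, Optional, Tuple

Observation = Tuple[str, str]   # (tool name, result or error text)
Action = Tuple[str, str]        # (tool name, argument)


def run_agent(
    goal: str,
    plan: Callable[[str, List[Observation]], Optional[Action]],
    tools: Dict[str, Callable[[str], str]],
    max_steps: int = 5,
) -> List[Observation]:
    """Plan, act, observe, and repeat until the planner stops or steps run out."""
    observations: List[Observation] = []
    for _ in range(max_steps):
        action = plan(goal, observations)        # plan: decide the next step
        if action is None:                       # planner has nothing left to do
            break
        tool_name, argument = action
        try:
            result = tools[tool_name](argument)  # act: call the chosen tool
        except Exception as exc:
            result = f"error: {exc}"             # failures become observations
        observations.append((tool_name, result)) # observe: record the outcome
    return observations


# Hypothetical tools: the primary service is down, the backup works.
def primary_lookup(order_id: str) -> str:
    raise TimeoutError("primary inventory service timed out")


def backup_lookup(order_id: str) -> str:
    return f"order {order_id}: 12 units in stock"


def scripted_planner(goal: str, observations: List[Observation]) -> Optional[Action]:
    """Stand-in for an LLM planner: try the primary tool, then fall back once."""
    if not observations:
        return ("primary_lookup", goal)
    last_tool, last_result = observations[-1]
    if last_result.startswith("error:") and last_tool == "primary_lookup":
        return ("backup_lookup", goal)           # course-correct after a failure
    return None                                  # done, or out of options


trace = run_agent(
    "A-17",
    scripted_planner,
    {"primary_lookup": primary_lookup, "backup_lookup": backup_lookup},
)
```

A scripted RPA flow would have stopped at the timeout. Here the failure is just another observation that feeds the next planning step.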

But building and deploying these autonomous agents, especially within the unforgiving context of regulated industries like finance and healthcare, is not a simple technical upgrade. It is a production challenge. As product leaders, we must move beyond the hype of agentic capabilities and confront the five critical, and often underestimated, hurdles to making them enterprise-ready.

1. From Tool Use to Tool Mastery

An agent's power grows with the tools it can use, but each tool is also a new point of failure. The strategic challenge isn't just connecting to an API; it's architecting a reliable and secure "tool chest."

This requires a level of engineering rigor far beyond a simple integration. We must create precise definitions for each tool, specifying valid parameter ranges and expected outputs. We need to build validation layers that act as a safety harness, preventing the agent from misusing a tool in a way that could corrupt data or trigger a downstream error. The smart strategy is to start with a small, highly curated set of well-defined tools and expand carefully, rather than handing the agent a large, loosely defined toolkit.
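One way to picture a precise tool definition plus a validation layer is the sketch below. It is an illustration under assumptions, not a reference design: `ToolSpec`, `ToolRegistry`, the `issue_refund` tool, and its 0.01 to 500.00 limit are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Tuple


class ToolValidationError(ValueError):
    """Raised when the agent requests a tool call that breaks the tool's contract."""


@dataclass(frozen=True)
class ToolSpec:
    name: str
    func: Callable[..., object]
    # Inclusive (min, max) range allowed for each numeric parameter.
    param_ranges: Dict[str, Tuple[float, float]]


class ToolRegistry:
    """A small, curated tool chest; every call is validated before it runs."""

    def __init__(self) -> None:
        self._tools: Dict[str, ToolSpec] = {}

    def register(self, spec: ToolSpec) -> None:
        self._tools[spec.name] = spec

    def call(self, name: str, **params: object) -> object:
        if name not in self._tools:
            raise ToolValidationError(f"unknown tool: {name}")
        spec = self._tools[name]
        # Reject calls with missing or unexpected parameters.
        if set(params) != set(spec.param_ranges):
            raise ToolValidationError(
                f"{name} expects parameters {sorted(spec.param_ranges)}, "
                f"got {sorted(params)}"
            )
        for key, (low, high) in spec.param_ranges.items():
            value = params[key]
            # bool is a subclass of int, so exclude it explicitly.
            if isinstance(value, bool) or not isinstance(value, (int, float)):
                raise ToolValidationError(f"{name}.{key} must be a number")
            if not low <= value <= high:
                raise ToolValidationError(
                    f"{name}.{key}={value} is outside [{low}, {high}]"
                )
        return spec.func(**params)


# Hypothetical tool with a hypothetical business limit.
def issue_refund(amount: float) -> str:
    return f"refunded {amount:.2f}"


registry = ToolRegistry()
registry.register(ToolSpec("issue_refund", issue_refund, {"amount": (0.01, 500.0)}))
```

With this in place, `registry.call("issue_refund", amount=25)` runs the tool, while an out-of-range amount or an unregistered tool name raises `ToolValidationError` before anything downstream is touched.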

2. From Probability to Predictability

The unpredictable nature of LLMs is a feature in creative applications but a problem in the enterprise. A system that follows explicit rules is predictable. An agent that makes decisions based on probability distributions is not. When an agent is approving a loan or determining a patient's treatment plan, "creative" and "unpredictable" are not desirable attributes.

Our job is to engineer predictability into this uncertain system. This involves implementing structured reasoning frameworks, like the ReAct (Reasoning and Acting) pattern, which interleave explicit reasoning steps with tool calls so the agent "shows its work" and follows a more systematic, auditable logic. It also means carefully tuning parameters like LLM temperature. A low temperature (near 0) concentrates sampling on the most likely tokens, producing more consistent outputs for high-stakes decisions, although it does not by itself guarantee that those outputs are correct. We must actively trade some of the model's creative potential for the consistency that enterprise workflows demand.
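The effect of temperature can be seen with a few lines of arithmetic. The sketch below applies the standard temperature-scaled softmax to three made-up scores (the `logits` values and the function name are illustrative, not taken from any particular model or API).

```python
import math
from typing import List


def softmax_with_temperature(logits: List[float], temperature: float) -> List[float]:
    """Convert raw scores to probabilities, dividing by the temperature first."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)                      # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical scores for three candidate next tokens.
logits = [2.0, 1.0, 0.5]

default_probs = softmax_with_temperature(logits, temperature=1.0)
low_temp_probs = softmax_with_temperature(logits, temperature=0.1)
```

At a temperature of 1.0 the top candidate holds a clear but not overwhelming share of the probability; at 0.1 nearly all of the probability collapses onto it, which is why repeated runs tend to agree. The model becomes more repeatable, not more right.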

3. From Single Tasks to End-to-End Processes

Enterprise workflows are not separate, single-step tasks. They are complex, multi-step processes that can span days and involve multiple systems. An agent managing a supply chain disruption, for example, must track progress, understand dependencies, and handle failures at any point without losing its place.

This requires a sophisticated approach to state management and error handling. We must design robust systems that serve as the agent's memory, allowing it to pause and resume complex tasks. We need clear validation checkpoints at each step of a workflow. Crucially, we must design intelligent fallback mechanisms. For example, if a predictive maintenance agent fails to access three different failure-log databases, its fallback should not be to simply give up. It should be programmed to escalate to a human operator with a complete summary of what it tried and where it failed.
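The escalation fallback described above might look like the following sketch. The names (`fetch_with_escalation`, `Attempt`, `FetchResult`) and the three log sources are hypothetical; in a real system the `escalate` callback would open a ticket or page an operator.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Tuple


@dataclass
class Attempt:
    source: str
    error: str


@dataclass
class FetchResult:
    data: Optional[str] = None
    escalated: bool = False
    attempts: List[Attempt] = field(default_factory=list)


def fetch_with_escalation(
    sources: List[Tuple[str, Callable[[], str]]],
    escalate: Callable[[str], None],
) -> FetchResult:
    """Try each source in order; if all fail, hand a full summary to a human."""
    attempts: List[Attempt] = []
    for name, fetch in sources:
        try:
            return FetchResult(data=fetch(), attempts=attempts)
        except Exception as exc:
            # Record what was tried and why it failed, then move on.
            attempts.append(Attempt(name, f"{type(exc).__name__}: {exc}"))
    summary = "\n".join(f"- {a.source}: {a.error}" for a in attempts)
    escalate(f"All {len(attempts)} log sources failed:\n{summary}")
    return FetchResult(escalated=True, attempts=attempts)


# Hypothetical failure-log databases, all unavailable in this example.
def unavailable(reason: str) -> Callable[[], str]:
    def fetch() -> str:
        raise ConnectionError(reason)
    return fetch


operator_inbox: List[str] = []
result = fetch_with_escalation(
    [
        ("plant_logs", unavailable("connection refused")),
        ("vendor_logs", unavailable("credentials expired")),
        ("archive_logs", unavailable("host unreachable")),
    ],
    escalate=operator_inbox.append,
)
```

The point of the design is that the human receives the attempt history rather than a bare "agent failed" alert, so they can pick up where the agent stopped.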

4. From Plausibility to Verifiable Truth

An agent that generates plausible but incorrect information (a hallucination) is one of the greatest threats to enterprise trust. In regulated environments where accuracy is non-negotiable, a single confident but wrong answer can have legal, financial, or patient-safety consequences.

Building a trustworthy agent requires a multi-layered defense against this. The foundation is grounding. By using Retrieval-Augmented Generation (RAG), we steer the agent to base its reasoning on specific, verifiable information from a trusted, private knowledge source. Grounding reduces hallucination but does not eliminate it, so we must then require the agent to provide citations for its claims, linking back to the source documents. For the most critical decisions, we must design human-in-the-loop workflows, where the agent's proposed action is automatically routed to a human expert for review and approval. Trust is not an inherent property of an AI model; it is a feature that must be carefully designed and engineered.
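The citation and review layers can be expressed as a simple gate in front of any agent action. This is a sketch under assumptions: the `Claim` structure, the document IDs, and the three routing outcomes are hypothetical, and checking that a cited ID was retrieved is only a first filter (it does not prove the document actually supports the claim).

```python
from dataclasses import dataclass
from typing import List, Set


@dataclass
class Claim:
    text: str
    source_ids: List[str]   # IDs of the documents the agent cites for this claim


def uncited_claims(claims: List[Claim], retrieved_ids: Set[str]) -> List[Claim]:
    """Return claims with no citation, or with a citation outside the retrieved set."""
    return [
        c for c in claims
        if not c.source_ids or not set(c.source_ids) <= retrieved_ids
    ]


def route(claims: List[Claim], retrieved_ids: Set[str], high_stakes: bool) -> str:
    """Decide what happens to the agent's proposed action."""
    if uncited_claims(claims, retrieved_ids):
        return "reject"          # ungrounded output never proceeds
    if high_stakes:
        return "human_review"    # grounded, but a human expert must approve
    return "auto_approve"


# Hypothetical documents returned by the retrieval step.
retrieved = {"policy-12", "policy-40"}

grounded = [Claim("Applicants need 24 months of income history.", ["policy-12"])]
ungrounded = [Claim("The fee is waived for new customers.", ["policy-99"])]

decision_for_loan = route(grounded, retrieved, high_stakes=True)
decision_for_faq = route(grounded, retrieved, high_stakes=False)
decision_for_bad_answer = route(ungrounded, retrieved, high_stakes=False)
```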

5. From Demo Scale to Enterprise Scale

An agent that performs flawlessly in a controlled demo can shatter under the weight of a high-traffic production environment. Cascading failures from tool timeouts and resource bottlenecks from model inference can severely degrade system performance, while incorrect responses that were rare in a demo become a steady stream at scale and erode user trust.

A production-ready product strategy must anticipate these challenges from day one. This means architecting for resilience. We need to implement robust error handling at every integration point, using patterns like circuit breakers to prevent one failing service from bringing down the entire system. We must build retry mechanisms with exponential backoff for temporary failures. And we need sophisticated LLMOps and monitoring tools to track metrics like tool timeout rates and response accuracy, allowing us to identify and address bottlenecks before they impact users.
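Both resilience patterns fit in a few dozen lines. The sketch below is a simplified, single-threaded illustration with hypothetical defaults (the thresholds, delays, and class names are assumptions); a production system would more likely rely on a maintained library and add jitter to the delays.

```python
import time
from typing import Callable, Optional, Tuple, Type, TypeVar

T = TypeVar("T")


def retry_with_backoff(
    func: Callable[[], T],
    max_attempts: int = 4,
    base_delay: float = 0.5,
    max_delay: float = 8.0,
    retry_on: Tuple[Type[BaseException], ...] = (TimeoutError,),
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Retry temporary failures, doubling the wait after each failed attempt."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        try:
            return func()
        except retry_on:
            if attempt == max_attempts - 1:
                raise                         # out of attempts: surface the error
            sleep(min(max_delay, base_delay * 2 ** attempt))
    raise AssertionError("unreachable")


class CircuitOpenError(RuntimeError):
    """Raised when a call is skipped because the circuit is open."""


class CircuitBreaker:
    """Stop calling a failing service for a cool-down period."""

    def __init__(
        self,
        failure_threshold: int = 3,
        reset_after: float = 30.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._threshold = failure_threshold
        self._reset_after = reset_after
        self._clock = clock
        self._failures = 0
        self._opened_at: Optional[float] = None

    def call(self, func: Callable[[], T]) -> T:
        if self._opened_at is not None:
            if self._clock() - self._opened_at < self._reset_after:
                raise CircuitOpenError("circuit open; call skipped")
            # Cool-down elapsed: allow one trial call (half-open state).
            self._opened_at = None
            self._failures = self._threshold - 1
        try:
            result = func()
        except Exception:
            self._failures += 1
            if self._failures >= self._threshold:
                self._opened_at = self._clock()   # trip the breaker
            raise
        self._failures = 0                        # success closes the circuit
        return result
```

The two patterns complement each other: backoff handles brief, temporary failures of a single call, while the breaker protects the rest of the system when a dependency stays down. The `sleep` and `clock` parameters exist so the timing behavior can be tested without waiting.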

Conclusion

The transition to autonomous AI agents is not a simple technological evolution; it is a test of an organization's product and engineering discipline. The companies that will lead in this new era will not be those with the most powerful models, but those with the most robust and resilient systems.